# How-to guides - File Streaming

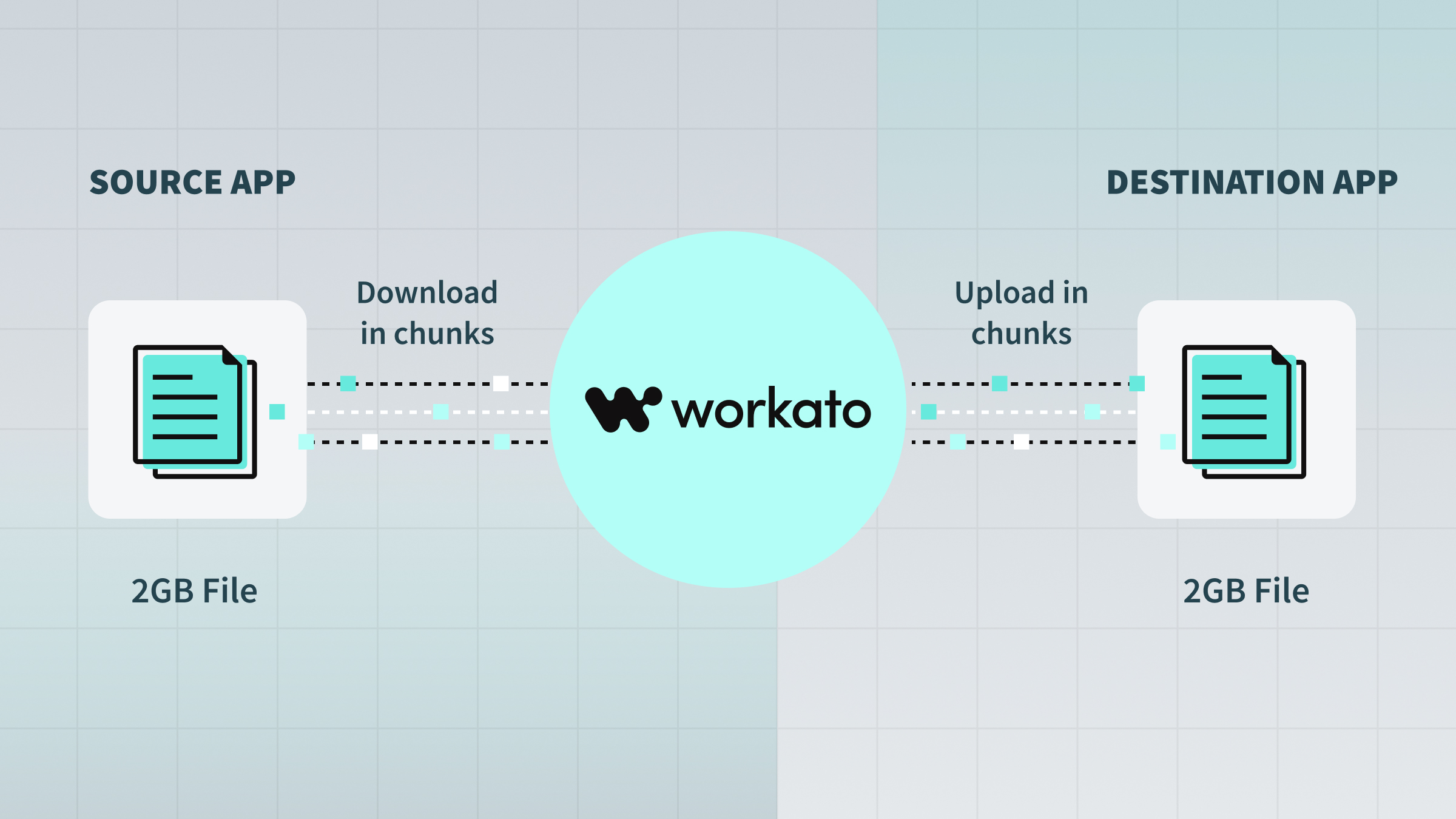

With Workato's file streaming library, you can build connectors that transfer gigabytes of data between a source and a destination. The connector downloads a small chunk of the larger file, uploads that chunk to the destination, and repeats this loop until the entire file has been transferred.
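The download-upload loop described above can be sketched as follows. This is a minimal, library-agnostic illustration, not Workato's streaming API: `stream_file`, `download_chunk`, and `upload_chunk` are hypothetical names, and the in-memory source and destination stand in for real remote storage.

```python
from typing import Callable


def stream_file(
    total_size: int,
    chunk_size: int,
    download_chunk: Callable[[int, int], bytes],
    upload_chunk: Callable[[int, bytes], None],
) -> int:
    """Copy a file chunk by chunk and return the number of chunks transferred.

    download_chunk(offset, length) and upload_chunk(offset, data) are
    hypothetical callbacks standing in for the source and destination APIs.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    offset = 0
    chunks = 0
    while offset < total_size:
        # Never request bytes past the end of the file.
        length = min(chunk_size, total_size - offset)
        data = download_chunk(offset, length)
        if not data:
            # Guard against an infinite loop if the source returns nothing.
            raise RuntimeError(f"empty chunk at offset {offset}")
        upload_chunk(offset, data)
        offset += len(data)
        chunks += 1
    return chunks


# Usage with in-memory stand-ins for the source and destination.
source = bytes(range(256)) * 40  # 10,240 bytes
destination = bytearray()


def download(offset: int, length: int) -> bytes:
    return source[offset:offset + length]


def upload(offset: int, data: bytes) -> None:
    # Assumes chunks arrive in order, so appending is enough.
    destination.extend(data)


chunk_count = stream_file(len(source), 4096, download, upload)
```

Only one chunk is held in memory at a time, which is what lets this pattern scale to files far larger than the connector's memory.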

Many of Workato's standard connectors for common file storage services, such as S3, Google Cloud Storage, and Azure Blob, have streaming enabled. Find the full list here.

ACTION TIMEOUT

SDK actions have a 180-second timeout limit.

You can use the `checkpoint!` method with file streaming actions to transfer files that would take longer than this limit. Refer to the "Using our multistep framework to extend upload times" section for more information.
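The idea behind checkpointing can be illustrated with a time-budgeted loop: copy chunks until a deadline approaches, then return the current offset so a later invocation can resume. This is a generic sketch and does not reproduce Workato's `checkpoint!` method. `TransferState`, `transfer_with_budget`, and `copy_chunk` are hypothetical names, and the injectable `clock` exists only so the example can be tested deterministically.

```python
import itertools
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class TransferState:
    offset: int = 0
    done: bool = False


def transfer_with_budget(
    state: TransferState,
    total_size: int,
    chunk_size: int,
    copy_chunk: Callable[[int, int], int],
    budget_seconds: float,
    clock: Callable[[], float] = time.monotonic,
) -> TransferState:
    """Copy chunks until the file is finished or the time budget is spent.

    copy_chunk(offset, length) is a hypothetical callback that moves one
    chunk and returns how many bytes it copied.
    """
    deadline = clock() + budget_seconds
    offset = state.offset
    while offset < total_size:
        if clock() >= deadline:
            # Out of time: return the resume point instead of timing out.
            return TransferState(offset=offset, done=False)
        length = min(chunk_size, total_size - offset)
        copied = copy_chunk(offset, length)
        if copied <= 0:
            raise RuntimeError(f"no progress at offset {offset}")
        offset += copied
    return TransferState(offset=offset, done=True)


# Usage with a fake clock that advances one "second" per call.
counter = itertools.count()


def fake_clock() -> float:
    return float(next(counter))


copied_ranges = []


def copy_chunk(offset: int, length: int) -> int:
    copied_ranges.append((offset, length))
    return length


first = transfer_with_budget(TransferState(), 10, 4, copy_chunk, 3, fake_clock)
second = transfer_with_budget(first, 10, 4, copy_chunk, 3, fake_clock)
```

With this fake clock, the first call copies two chunks and stops at offset 8. The second call resumes from that offset and finishes the file.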

# Prerequisites

Downloading files: the API should support HTTP `Range` requests as described in the HTTP RFC for Range headers. This lets the connector download a specific byte range of the file.
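Here is a small sketch of how a file can be divided into `Range` header values. In HTTP, a byte range `bytes=start-end` is inclusive at both ends, and a server that honors the header typically responds with `206 Partial Content`. The function name `range_header_values` and the example URL are hypothetical. The request is only constructed here and never sent.

```python
import urllib.request
from typing import List


def range_header_values(total_size: int, chunk_size: int) -> List[str]:
    """Split a file of total_size bytes into inclusive HTTP byte ranges."""
    values = []
    for start in range(0, total_size, chunk_size):
        # The end offset in a Range header is inclusive, hence the -1.
        end = min(start + chunk_size, total_size) - 1
        values.append(f"bytes={start}-{end}")
    return values


ranges = range_header_values(10, 4)

# Build (but do not send) a request for the first chunk.
request = urllib.request.Request(
    "https://example.com/large-file.bin",  # hypothetical URL
    headers={"Range": ranges[0]},
)
```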

Uploading files: the API should support uploading a file in multiple discrete chunks, either through `Content-Range` headers or through another form of chunked upload, such as Azure's multipart upload with block IDs.
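The following sketch shows the two chunked-upload styles mentioned above. A `Content-Range` value has the form `bytes start-end/total`, with an inclusive end. Azure's Put Block operation requires each block ID to be a Base64 string, and all block IDs for a given blob must have the same length. Zero-padding the index is one way to satisfy this. The function names and the `block-NNNNNN` naming scheme are hypothetical.

```python
import base64
from typing import List


def content_range_values(total_size: int, chunk_size: int) -> List[str]:
    """Build Content-Range values (inclusive end) for each upload chunk."""
    values = []
    for start in range(0, total_size, chunk_size):
        end = min(start + chunk_size, total_size) - 1
        values.append(f"bytes {start}-{end}/{total_size}")
    return values


def block_id(index: int) -> str:
    """Return a Base64 block ID of constant length for a chunk index.

    Zero-padding keeps the pre-encoding length identical for every block,
    as Azure requires for all block IDs of one blob.
    """
    raw = f"block-{index:06d}".encode("ascii")
    return base64.b64encode(raw).decode("ascii")


upload_ranges = content_range_values(10, 4)
block_ids = [block_id(i) for i in range(3)]
```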

# Guides

The guides below describe the different ways to build file streaming actions, depending on the API's capabilities:

- Download file via file streaming (Range headers)

- Upload file via file streaming (Content-Range headers)

- Upload file via file streaming (Chunk ID)

# What happens if your API does not meet the prerequisites?

If the API you work with does not support chunked uploads or downloads, you can still download and upload files in memory. However, this approach is subject to both time and size limits, so we don't recommend it unless absolutely necessary.

Last updated: 5/22/2025, 4:42:29 AM