# Amazon S3 - New CSV file trigger

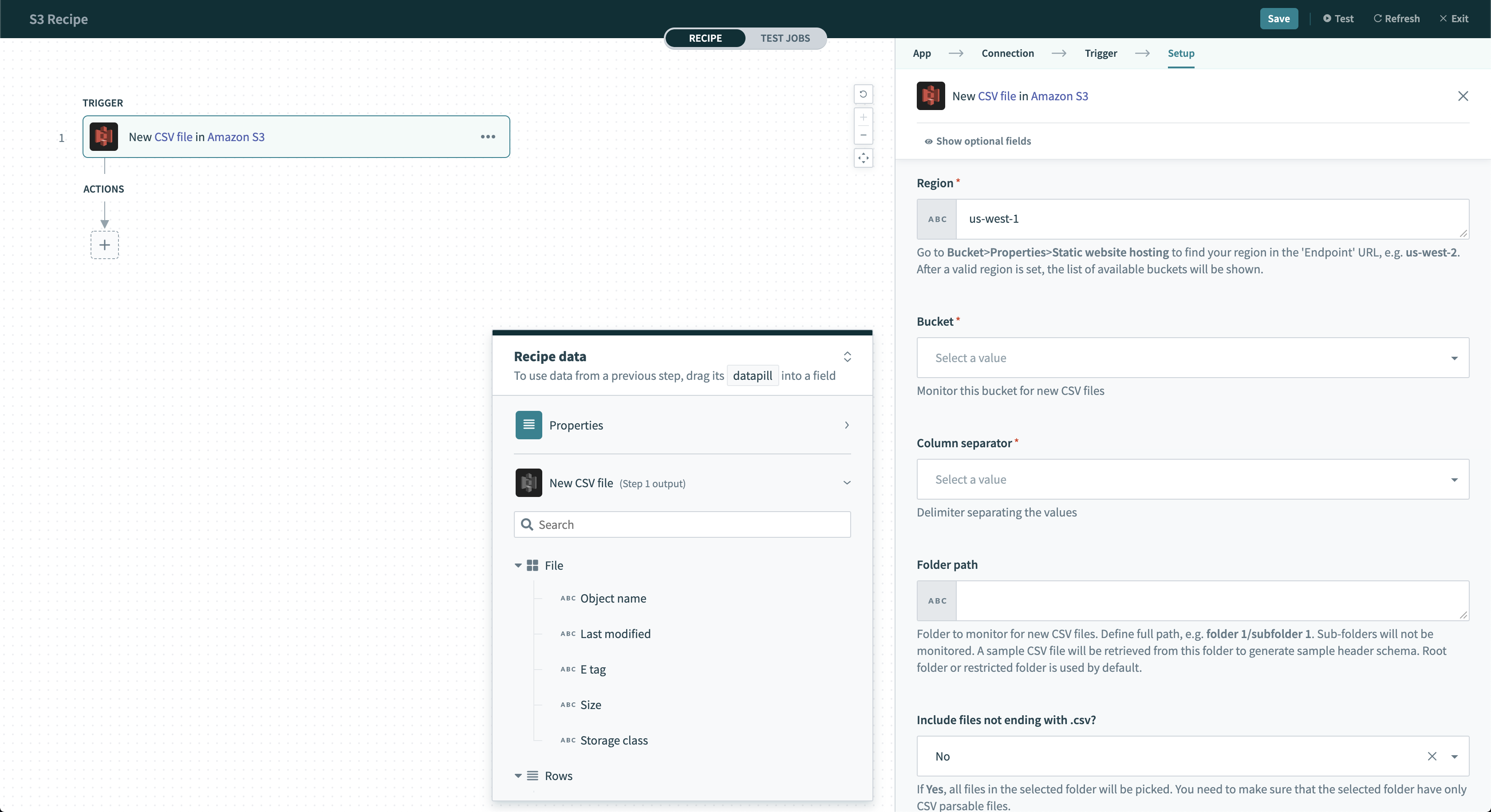

The New CSV file trigger monitors a selected bucket or folder in Amazon S3 for newly added CSV files.

The trigger checks the selected folder for new or updated CSV files once every poll interval. The output includes the file’s metadata and the file contents, delivered as CSV rows in batches.

Note that in Amazon S3, when you rename a file, it is considered a new file. When you upload a file and overwrite an existing file with the same name, it is considered an updated file but not a new file.
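
The new versus updated distinction above can be pictured with a small sketch. It compares two folder listings and labels each changed key. This is only an illustration, not how Workato implements the trigger: the `ObjectInfo` type, the `classify_changes` function, and the choice to compare last modified timestamps are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectInfo:
    """Hypothetical stand-in for one entry in an S3 folder listing."""
    key: str
    last_modified: str  # ISO 8601 timestamp, assumed for this sketch


def classify_changes(previous, current):
    """Label each changed key in `current` as 'new' or 'updated'."""
    seen = {obj.key: obj.last_modified for obj in previous}
    changes = {}
    for obj in current:
        if obj.key not in seen:
            # A renamed file appears under a key that was not seen before.
            changes[obj.key] = "new"
        elif seen[obj.key] != obj.last_modified:
            # Same key, newer timestamp: the file was overwritten.
            changes[obj.key] = "updated"
    return changes


before = [
    ObjectInfo("orders.csv", "2026-01-01T10:00:00Z"),
    ObjectInfo("leads.csv", "2026-01-01T10:00:00Z"),
]
after = [
    ObjectInfo("orders.csv", "2026-01-02T09:30:00Z"),    # overwritten
    ObjectInfo("contacts.csv", "2026-01-01T10:00:00Z"),  # leads.csv renamed
]
print(classify_changes(before, after))
# {'orders.csv': 'updated', 'contacts.csv': 'new'}
```

In this sketch, the renamed file shows up as `new` because only its key changed, while the overwritten file keeps its key and is labeled `updated`.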


# Input

| Input field | Description |
|---|---|
| When first started, this recipe should pick up events from | Specify the date and time from which the recipe picks up CSV files when it starts for the first time. You can't change this value after the recipe is run or tested. Learn more about this input field. |
| Region | Select the region of the bucket to monitor for new or updated files. For example, `us-west-2`. In Amazon S3, go to Bucket > Properties > Static website hosting to find your region in the Endpoint URL. |
| Bucket | Select or enter the bucket to monitor for new CSV files. You can select a bucket from the picklist or enter the bucket name directly. |
| Column separator | Enter the delimiter that separates the columns in the CSV file. |
| Folder path | Select the folder to monitor for new CSV files. Define the full path (for example, `folder 1/subfolder 1`). Sub-folders are not monitored. The default is the root folder or restricted folder. |
| Include files not ending with .csv? | Indicate whether to pick up CSV files that don't have the `.csv` extension, such as files exported from other systems. If you include them, ensure that all files in this folder can be parsed as CSV. |
| Column names | Enter the column names of the CSV file. You can manually define the column names with one column header per line. |
| Batch size | Define the number of CSV rows to process in each batch. The maximum is 1000 rows per batch. Workato divides the CSV file into smaller batches to process it more efficiently. Use a larger batch size to increase data throughput. Workato sometimes reduces the batch size automatically to avoid exceeding API limits. Refer to Batch Processing for more information. |
| Skip header row? | Select Yes if the CSV file contains a header row. Workato does not process that row as data. |

This trigger supports Trigger Condition, which allows you to filter trigger events.
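
As a rough illustration of how the Column separator, Column names, Skip header row, and Batch size inputs fit together, the following sketch splits CSV text into batches. It is not Workato's implementation. The `batch_csv_rows` function, the dictionary keys, and the choice to number data rows starting from 1 after the header are assumptions made for the example.

```python
import csv
import io

MAX_BATCH_SIZE = 1000  # the documented maximum rows per batch


def batch_csv_rows(text, column_names, separator=",", skip_header=True,
                   batch_size=MAX_BATCH_SIZE):
    """Yield batches of parsed rows from CSV text (hypothetical helper)."""
    batch_size = min(batch_size, MAX_BATCH_SIZE)
    reader = csv.reader(io.StringIO(text), delimiter=separator)
    if skip_header:
        next(reader, None)  # discard the header so it is not treated as data
    batch = []
    for line_number, values in enumerate(reader, start=1):
        # Pair each value with the column name defined for its position.
        batch.append({"line": line_number,
                      "columns": dict(zip(column_names, values))})
        if len(batch) == batch_size:
            yield {"rows": batch, "list_size": len(batch)}
            batch = []
    if batch:
        # The final batch may hold fewer rows than batch_size.
        yield {"rows": batch, "list_size": len(batch)}


sample = "id;name\n1;Ada\n2;Grace\n3;Linus\n"
batches = list(batch_csv_rows(sample, ["id", "name"],
                              separator=";", batch_size=2))
for b in batches:
    print(b["list_size"], [row["columns"]["name"] for row in b["rows"]])
# 2 ['Ada', 'Grace']
# 1 ['Linus']
```

With a batch size of 2, the three data rows arrive as one full batch and one partial batch, which is why downstream steps should rely on the list size rather than assume every batch is full.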

# Output

| Output field | Description |
|---|---|
| Object name | The full name of the file. |
| Last modified | The last modified timestamp of the file. |
| E tag | The hash of the file object, generated by Amazon S3. |
| Size | The file size in bytes. |
| Storage class | The storage class of this file object. This is usually S3 Standard. |
| Line | The number of this CSV row. |
| Columns | Contains all column values in this CSV row. You can use the nested datapills to map each column value. |
| List size | The number of rows in the CSV rows list. |

Last updated: 1/16/2026, 4:23:47 PM